
‘This Is Just How We Do Things Now’: The Quiet Collapse of Standards

By Jay

Series: Leadership

Tags: organizational-behavior, leadership, systems-thinking, case-studies

Most cultural disasters don’t start with a bang. They start with a whisper.

A company has a policy: all code must be reviewed before deployment. Anyone who works in tech knows this drill. Despite the grumbling about “slow processes,” it’s a good policy that helps reduce bugs, improve quality, and share knowledge.

But one day, a team is under pressure to ship. They’re running late, and (as far as they are concerned) the code is solid. So they decide to skip the review, “just this once.”

The deploy goes smoothly. Bugs are minimal, the feature is a hit, and the executive sponsor is thrilled.

You can see where this is going. The next time they’re under pressure, they skip the review again. And again. And again. Before long, skipping reviews is routine.

So, what happens when something finally does go wrong? Does anyone call it a policy failure or a process breakdown? Nope. It’s just bad luck.

The scary part is that nobody remembers when skipping reviews became the norm. It just feels like how things have always been done.

We have a name for this: Normalization of Deviance. This is how high-performing teams start shipping junk. It’s how great engineers burn out. It’s how safety nets become decoration.

What is “Normalization of Deviance”?

The term “Normalization of Deviance” was coined by sociologist Diane Vaughan, who studied the 1986 Challenger disaster. NASA engineers knew the O-ring seals were prone to failure in low temperatures. But because previous launches hadn’t failed catastrophically when the O-rings eroded, they started treating that erosion as normal.

They didn’t forget the risk. They just got used to it.

That’s the core of normalization: when a deviation from standard behavior doesn’t cause an immediate problem, it feels less and less like a deviation at all. Eventually, it becomes the new normal, even if it’s unsafe, unsustainable, or unethical.

For those of my generation, watching Challenger disintegrate on live television is a vivid memory. It’s also a textbook example of how normalization of deviance can lead to catastrophic failures. But it’s not just about space shuttles or engineering disasters. It’s about how teams and organizations slowly drift away from their original standards, often without even realizing it.

Not to Be Confused: Deviancy Spiral vs. Normalization of Deviance

Let’s clear up a common mix-up. The Deviancy Amplification Spiral describes how society’s reaction to deviance can escalate that deviance. It’s driven by external feedback loops and is frequently seen around crime, moral panics, and subcultures. For example, when a community reacts strongly to a perceived deviant behavior, people may push back against what they see as injustice or overreach, producing more of the very behavior the community was reacting to. (Wikipedia)

By contrast, Normalization of Deviance is an internal process. It’s about how standards quietly erode — not because of pressure, but because of silence. It’s not about reacting too harshly to deviance; it’s about not reacting at all. Silence becomes acceptance, and acceptance becomes the new norm.

| Concept | Deviancy Spiral | Normalization of Deviance |
|---|---|---|
| Trigger | Media/political overreaction to deviance | Internal tolerance for breaking norms |
| Feedback Loop | Escalates due to backlash and policing | Escalates due to absence of consequences |
| Context | Crime, moral panic, subcultures | Safety, process discipline, engineering |
| Driver | Society and systems | Teams and organizations |

What Does It Look Like?

Normalization of deviance rarely announces itself. It slips in under the radar, through the cracks in a team’s culture. It’s also rarely a single event. It’s a slow drift, fueled by small decisions that seem harmless at the time.

Take testing, for example. A team’s got a deadline, and writing tests slows things down. Someone suggests, “We’ll add them later,” and no one argues. (The fact that most companies minimize or ignore the importance of testing in hiring and promotion is a topic for another day.) The feature ships, nothing explodes, and the exception is now the rule. The next time, skipping tests feels normal. Eventually, writing tests isn’t just rare, it’s weird. It signals you don’t “move fast.” The team doesn’t consciously reject quality; it just silently moves on.

An even more insidious example is how teams handle toxic behavior. Consider a developer with a history of delivering high-impact features who consistently ignores feedback, interrupts others, belittles colleagues, and dominates discussions. Tolerating that behavior is a classic case of normalization of deviance. It’s not that anyone approves of it; it’s just easier to accept when the results are good. Behavior that would have been called “abrasive” or “disruptive” gets rebranded as “passionate” or “driven.” The team’s standards quietly drop.

Then there’s burnout. A team member works late nights, pushes through weekends, and ships a critical feature. Managers praise their dedication and commitment. Unfortunately, this sets a precedent. Others feel pressured to do the same, equating long hours with value. The team’s culture shifts from “work-life balance” to “work-life what?” Over time, the expectation becomes that everyone should be willing to sacrifice personal time for the sake of the project. The team’s standards for work-life balance quietly drop.

It is important to note that normalization of deviance is not always a conscious choice. It often starts with well-intentioned decisions, like prioritizing speed or meeting deadlines. But over time, these decisions accumulate, and the team’s standards erode.

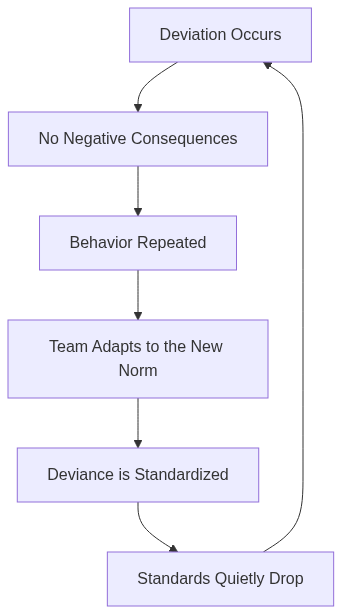

Diagram: How Normalization Works

Some Examples

Facebook: Breaking Things and Maybe Moving Fast?

In its early years, Facebook adopted the mantra “Move fast and break things,” encouraging rapid development and deployment. This approach prioritized speed over thorough testing and oversight. Over time, this led to the normalization of bypassing standard procedures, contributing to issues like privacy breaches and the spread of misinformation. Despite internal warnings, the company often addressed problems only after public exposure, exemplifying how deviant practices became standard operating procedure. (Mind Matters, The New Yorker)

Uber: It’s Tough to be a God (View)

Uber developed an internal tool known as “God View,” allowing employees to track the real-time locations of customers. Initially intended for operational purposes, the tool was misused by employees to monitor individuals without consent, including high-profile figures. This misuse became normalized within the company culture until it was publicly exposed, leading to investigations and policy changes. (Pitchfork)

GitHub: Running on Technical Debt

In GitHub’s early growth phase, speed was everything. Engineers were shipping features rapidly, and in that rush, practices like unit testing, code review, and thorough code documentation often took a back seat. At first, this was seen as a reasonable tradeoff — the team was small, velocity was paramount, and nothing broke catastrophically. But as GitHub scaled, the early shortcuts became entrenched. New hires inherited a sprawling Ruby codebase with minimal guardrails, and by then, the pain of retrofitting good practices felt insurmountable. What started as “we’ll fix it later” quietly evolved into “this is just how it works here.” The normalization of technical debt became so complete that even internally, engineers described the codebase as intimidating and brittle. It wasn’t just a legacy system — it was a legacy mindset. (Zach Holman, GitHub founding engineer)

Phantom Pager Syndrome: Alert Fatigue

In many environments, particularly at scale, teams configure alerting systems to monitor infrastructure and application health. Initially, alerts are set with good intent — catch failures early, prevent downtime. But as incidents pile up and on-call fatigue sets in, the alert definitions grow noisy. Engineers start adding alerts “just in case,” and suddenly, the pager is firing for every minor blip. Because most alerts don’t signal real problems, teams begin to ignore them — or worse, silence them altogether. The feedback loop is complete: alerts exist, but no one responds. When a real failure does happen, it gets lost in the noise. What began as a safety net became a liability. This is normalization of deviance at a systems level — where the infrastructure is doing what it was told, but no longer what anyone needs. (PagerDuty State of Incident Response Report)
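The feedback loop described above can be made measurable. Below is a minimal Python sketch of an alert-noise audit, assuming a hypothetical alert history in which each firing records the alert’s name and whether a human actually needed to act. The `AlertEvent` record, the `noisy_alerts` function, and the default thresholds (at least 5 firings, under 50% actionable) are illustrative assumptions, not part of PagerDuty’s or any other monitoring tool’s API.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class AlertEvent:
    """One firing of an alert (hypothetical record format)."""
    name: str         # which alert rule fired
    actionable: bool  # did a human actually need to do something?


def noisy_alerts(events, min_firings=5, threshold=0.5):
    """Return (name, firings, actionable_rate) for alerts that fire often
    but rarely lead to action, sorted from least to most actionable.

    min_firings and threshold are illustrative defaults, not a standard.
    """
    firings = defaultdict(int)
    acted_on = defaultdict(int)
    for event in events:
        firings[event.name] += 1
        if event.actionable:
            acted_on[event.name] += 1

    flagged = []
    for name, count in firings.items():
        rate = acted_on[name] / count
        # Only judge alerts with enough history to be meaningful.
        if count >= min_firings and rate < threshold:
            flagged.append((name, count, rate))
    return sorted(flagged, key=lambda item: item[2])


if __name__ == "__main__":
    # Made-up history for illustration only.
    history = (
        [AlertEvent("disk_80_percent", False)] * 9
        + [AlertEvent("disk_80_percent", True)]
        + [AlertEvent("api_error_rate", True)] * 4
        + [AlertEvent("api_error_rate", False)]
        + [AlertEvent("cpu_spike", False)] * 3
    )
    for name, count, rate in noisy_alerts(history):
        print(f"{name}: fired {count} times, actionable {rate:.0%}")
```

In this made-up history, only `disk_80_percent` is flagged (10 firings, 10% actionable); `api_error_rate` usually leads to action, and `cpu_spike` has too few firings to judge. The specific thresholds matter less than the habit: once actionability is measured, noisy alerts get retired deliberately instead of silenced informally.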

Oil and Water: Deepwater Horizon

Prior to the 2010 Deepwater Horizon oil spill, BP and its partners routinely overlooked safety protocols and warning signs to maintain production schedules. This culture of complacency and the normalization of cutting corners led to one of the worst environmental disasters in U.S. history. Investigations revealed that the company had a history of safety violations and that employees often felt discouraged from reporting unsafe conditions. (Vanity Fair)

Employees Must Wash Hands?

Hand hygiene is a fundamental practice in preventing hospital-acquired infections. Despite this, healthcare workers may skip handwashing due to time pressure or a false sense of safety. When such behavior goes unchallenged and no immediate adverse outcomes occur, it becomes normalized, potentially leading to widespread health risks. (AJIC Journal, CDC)

It’s All Good: Checklist Compliance in Aviation

In aviation, strict adherence to pre-flight checklists is crucial for safety. However, experienced pilots sometimes skip steps (or entire checklists), believing their expertise compensates for procedural lapses. Over time, this behavior can become normalized, increasing the risk of accidents. Studies have shown that such deviations from standard operating procedures, when uncorrected, can lead to serious incidents. (Wikipedia, Wikipedia, Wikipedia)

Summary Table

| Sector | Deviation Tolerated | Normalization Result | Consequence |
|---|---|---|---|
| Tech | Deploying without review | No tests, no QA | Security/privacy failures |
| Tech | Using internal admin tools | Abuse of user data | Loss of trust, lawsuits |
| Tech | Skipping tests and code review in codebase | Technical debt becomes systemic | Slower dev cycles, brittle systems |
| Tech | Adding noisy alerts without triage | Alert fatigue, important signals ignored | Outages missed, reduced reliability |
| Aerospace | Ignoring O-ring erosion | “No failure = no risk” thinking | Shuttle explosion |
| Energy | Skipping safety checks | “Speed over safety” culture | Environmental disaster |
| Healthcare | Skipping sanitation steps | Handwashing becomes optional | Infection spread |

How to Fight It

There isn’t a simple solution to normalization of deviance. It’s a complex, insidious process, so the best first step is to identify it early. This is where new team members can be invaluable: often, a simple “why do we do it this way?” is the shakeup needed to break the cycle.

Don’t be afraid to ask the “dumb questions.” In fact, encourage them. Then listen carefully to the answers. If you hear any of these phrases, you may have an opportunity to interrupt the process:

- “We’ve always done it this way.”

- “It’s not a big deal.”

- “It’s worked fine so far.”

- “It’s not worth the effort to change it.”

Each of these phrases is a justification for behavior that has become normalized. When you hear one, dig deeper and force a conversation. It signals that the team has stopped questioning its own practices, and that’s where the real danger lies.

Most problems don’t start with loud failures. They start with polite silence. When team members shrug off concerns or accept subpar practices as the norm, it’s a sign that normalization of deviance is taking hold.

Most importantly, lead by example. Take pains to recognize not just the wins, but the behaviors that lead to them. Praise the team member who insists on writing tests, even if it slows down the release. Acknowledge the engineer who points out a potential risk in a deployment, even if it means delaying the launch. Model the behaviors you want to see, and make it clear that they matter.

Final Thought

Most disasters, be they cultural, technical, or ethical, don’t come from villains or saboteurs. They come from teams of good people, slowly teaching themselves that the rules don’t matter anymore. And the worst part? It usually feels fine until it doesn’t.

If your organization feels like it’s getting faster but messier, more “efficient” but less resilient, stop and look closer. You might not be accelerating. You might just be falling — slowly, silently, in a direction no one intended.

And if nothing’s broken yet?

That’s exactly how normalization works.

Related Reading

- Systems Thinking: Systems and the Cobra Effect - How well-intentioned solutions can create unintended consequences

- Leadership: From Line Cook to Chef - How experience gaps lead to systematic failures in technical roles

- Communication: Breaking Down Silos - Crew Resource Management principles for preventing organizational failures

- Accountability: Leaders Who Fail Up - When leadership failures get rebranded as success stories

- Technical Systems: Drowning in Beeps - How alert fatigue creates its own normalization of deviance